The Foundation of Neural Network Working Principle

Neural networks, once a concept relegated to science fiction, have permeated our daily lives. From voice assistants understanding our commands to self-driving cars navigating busy streets, these systems sit behind much of modern technology. But have you ever wondered how they work? How do they take inspiration from the human brain to make sense of complex data? In this article, we're going to break down the working principles of neural networks, demystify the jargon, and give you a clear understanding of the technology.

The Basics: What Are Neural Networks?

Figure: Human brain with neurons

Neural networks, often referred to as artificial neural networks (ANNs), are a family of machine learning models loosely inspired by the structure and function of the human brain. They're designed to recognize patterns, process information, and make predictions. A neural network consists of interconnected nodes, akin to neurons in the brain. Each node, called an "artificial neuron" (the "perceptron" is the classic early version), multiplies its inputs by weights, adds a bias, and passes the result through an activation function. Together, these nodes perform complex tasks.
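To make the idea of an artificial neuron concrete, here is a minimal sketch in plain Python. The input values, weights, and bias are made up for illustration, and the sigmoid activation is just one common choice, not something this article prescribes.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs plus a bias, then an activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical values chosen only for illustration.
output = neuron(inputs=[0.5, 0.8], weights=[0.4, -0.6], bias=0.1)
print(output)  # a single number between 0 and 1
```

A full network is many of these small units wired together, with the outputs of one group feeding the inputs of the next.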

Think of it like a puzzle. Each piece of information is a puzzle piece, and the neural network is the master puzzler, piecing them together to create a meaningful picture.

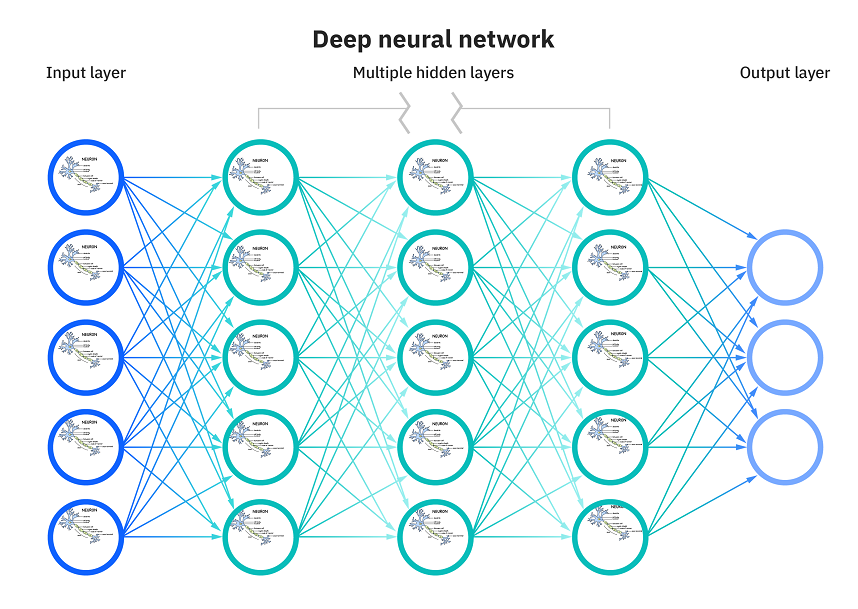

Peeling Back the Layers: Neural Network Structure

Figure: Artificial neural network

Input Layer

Every neural network starts with the input layer. This is where the network receives its raw data. Let's say you want to build a neural network that can identify whether an image contains a cat or a dog. In this case, the input layer would receive the pixel values of the image.
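As a rough sketch of what "receiving pixel values" can look like, the snippet below flattens a tiny grayscale image into a list of numbers. The 2x3 image and the helper name `image_to_input_vector` are invented for this example, and scaling pixels to the range 0 to 1 is a common convention rather than a requirement.

```python
def image_to_input_vector(image):
    """Flatten a 2D grid of 0-255 grayscale pixels into a flat list of floats in [0, 1]."""
    return [pixel / 255.0 for row in image for pixel in row]

# A made-up 2x3 grayscale "image"; a real photo would have far more pixels.
tiny_image = [
    [0, 128, 255],
    [64, 192, 32],
]

input_vector = image_to_input_vector(tiny_image)
print(len(input_vector))  # one value per pixel
```

Each value in `input_vector` would feed one node of the input layer.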

Hidden Layers

Now, we venture into the hidden layers. These intermediate layers are where the real magic happens. Hidden layers transform the raw input data through a series of mathematical operations (weighted sums followed by activation functions), learning patterns and features in the process. The number of hidden layers and neurons in each layer varies depending on the complexity of the task at hand. It's like having a team of specialists working together to crack a case.
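The transformation a hidden layer applies can be sketched in a few lines. This is a simplified illustration, assuming a fully connected layer with a ReLU activation; the weights and biases are arbitrary numbers picked so the arithmetic is easy to follow, whereas a trained network would have learned them from data.

```python
def relu(z):
    """Keep positive values, replace negative values with zero."""
    return max(0.0, z)

def dense_layer(inputs, weights, biases):
    """Compute one fully connected layer; weights[j] holds the weights of neuron j."""
    return [
        relu(sum(x * w for x, w in zip(inputs, neuron_weights)) + bias)
        for neuron_weights, bias in zip(weights, biases)
    ]

# Hypothetical layer with 2 inputs and 3 hidden neurons.
inputs = [1.0, 2.0]
hidden_weights = [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]]
hidden_biases = [0.0, -1.0, 0.5]

hidden = dense_layer(inputs, hidden_weights, hidden_biases)
print(hidden)  # [0.0, 2.0, 0.5]
```

Stacking several such layers, each feeding the next, is what makes a network "deep".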

Output Layer

Finally, we reach the output layer. This layer provides the result or prediction generated by the neural network. In our cat-dog image recognition example, the output layer might signal "cat" or "dog" based on the input data and what the network has learned.
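One common way for an output layer to "signal" a class is to turn raw scores into probabilities with softmax and pick the largest. The sketch below assumes that approach; the raw scores are hypothetical stand-ins for what the last layer of a trained network might produce.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    # Subtracting the maximum keeps the exponentials numerically stable.
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

labels = ["cat", "dog"]
raw_scores = [2.0, 0.5]  # hypothetical scores from the final layer

probabilities = softmax(raw_scores)
prediction = labels[probabilities.index(max(probabilities))]
print(prediction)  # cat
```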

Learning the Ropes: Training Neural Networks

Now that you understand the structure of neural networks, let's talk about training them. You see, neural networks don't come pre-loaded with knowledge; they have to learn from data. This process is similar to how we learn from experience.

Data, Data, and Some More Data

The first step in training a neural network is to feed it a ton of data. For our image recognition network, this would involve thousands of labeled images of cats and dogs. The network uses this data to identify patterns and associations between different features in the images.
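"Labeled" simply means every example comes paired with the right answer. A small sketch of what that might look like in code follows; the file names and the cat = 0, dog = 1 mapping are made up for illustration.

```python
# Hypothetical file names and labels, invented purely for illustration.
labeled_examples = [
    ("cat_001.jpg", "cat"),
    ("dog_001.jpg", "dog"),
    ("cat_002.jpg", "cat"),
    ("dog_002.jpg", "dog"),
]

# Networks work with numbers, so each text label is mapped to a numeric target.
LABEL_TO_TARGET = {"cat": 0, "dog": 1}

def encode_labels(examples):
    """Return the numeric target for every (file_name, label) pair."""
    return [LABEL_TO_TARGET[label] for _, label in examples]

targets = encode_labels(labeled_examples)
print(targets)  # [0, 1, 0, 1]
```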

Figure: ANN forward propagation

Loss and Optimization

As the network works through the training data, it needs a way to assess how well it's doing. This is where the loss function comes into play. The loss function measures the error, that is, the difference between the predicted output and the actual target. The goal of training is to minimize this error. Optimization algorithms, such as gradient descent, repeatedly adjust the network's parameters (weights and biases) in the direction that reduces the loss. It's like fine-tuning a musical instrument until it produces the perfect melody.
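Here is a deliberately tiny sketch of a loss function and gradient descent working together. It assumes the simplest possible "network", a single weight with no bias, and uses mean squared error as the loss; the data, learning rate, and step count are arbitrary choices for the example.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)

# Toy data generated by the rule y = 2 * x, so the ideal weight is 2.
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

weight = 0.0          # start from an uninformed guess
learning_rate = 0.05  # how big each adjustment is
losses = []

for step in range(100):
    predictions = [weight * x for x in xs]
    losses.append(mean_squared_error(predictions, ys))
    # Gradient of the loss with respect to the weight: mean of 2 * (prediction - target) * x.
    gradient = sum(2 * (p - y) * x for p, y, x in zip(predictions, ys, xs)) / len(xs)
    # Move the weight a small step against the gradient to reduce the loss.
    weight -= learning_rate * gradient

print(weight)     # close to 2
print(losses[0])  # loss before any training
print(losses[-1]) # much smaller loss after training
```

Real networks do the same thing with thousands or millions of parameters at once.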

Backpropagation

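Backpropagation is how the network works out which weights and biases to change, and by how much. After a forward pass produces a prediction and the loss function scores it, the error is sent backward through the network, from the output layer toward the input layer. Using the chain rule from calculus, the algorithm computes the gradient of the loss with respect to each weight and bias, which tells us how much each parameter contributed to the error. The optimizer then uses these gradients to update the parameters.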
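To make backpropagation concrete, here is a minimal sketch for a toy network with one input, one sigmoid hidden neuron, and one linear output, trained with squared error. All names and numbers are assumptions made for this example. The gradients are derived by hand with the chain rule and then compared against a numerical estimate as a sanity check.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, w1, w2):
    """Forward pass: input -> hidden activation -> prediction."""
    h = sigmoid(w1 * x)
    y_hat = w2 * h
    return h, y_hat

def loss(x, target, w1, w2):
    _, y_hat = forward(x, w1, w2)
    return (y_hat - target) ** 2

def backward(x, target, w1, w2):
    """Backward pass: apply the chain rule from the loss back to each weight."""
    h, y_hat = forward(x, w1, w2)
    d_loss_d_yhat = 2 * (y_hat - target)
    d_loss_d_w2 = d_loss_d_yhat * h             # y_hat = w2 * h
    d_loss_d_h = d_loss_d_yhat * w2
    d_loss_d_w1 = d_loss_d_h * h * (1 - h) * x  # sigmoid'(z) = h * (1 - h)
    return d_loss_d_w1, d_loss_d_w2

# Hypothetical input, target, and starting weights.
x, target, w1, w2 = 1.5, 1.0, 0.3, -0.2
grad_w1, grad_w2 = backward(x, target, w1, w2)

# Numerical check: nudge each weight slightly and measure how the loss changes.
eps = 1e-6
numeric_w1 = (loss(x, target, w1 + eps, w2) - loss(x, target, w1 - eps, w2)) / (2 * eps)
numeric_w2 = (loss(x, target, w1, w2 + eps) - loss(x, target, w1, w2 - eps)) / (2 * eps)

print(grad_w1, numeric_w1)
print(grad_w2, numeric_w2)
```

The two numbers on each printed line should agree closely, which suggests the hand-derived gradients are correct.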

Figure: ANN backpropagation

Activation Functions: The Nonlinearity

Figure: ANN activation functions

In our journey through neural networks, we can't skip the importance of activation functions. These functions introduce non-linearity to the network, enabling it to model complex relationships in data.
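The sketch below shows three widely used activation functions (sigmoid, tanh, and ReLU) and a small demonstration of why non-linearity matters. The specific weights in the demonstration are arbitrary; the point is that two stacked layers without an activation behave exactly like one linear layer.

```python
import math

def sigmoid(z):
    """Output in (0, 1); often used for yes/no probabilities."""
    return 1.0 / (1.0 + math.exp(-z))

def tanh(z):
    """Output in (-1, 1); a zero-centered alternative to sigmoid."""
    return math.tanh(z)

def relu(z):
    """Zero for negative inputs, unchanged for positive inputs."""
    return max(0.0, z)

for z in [-2.0, 0.0, 2.0]:
    print(z, sigmoid(z), tanh(z), relu(z))

# Without an activation, two stacked linear layers collapse into one:
# 3 * (2 * x) is the same function as 6 * x.
def two_linear_layers(x):
    return 3.0 * (2.0 * x)

def one_linear_layer(x):
    return 6.0 * x

# With ReLU in between, negative inputs are cut off, so the result is no longer a straight line.
def two_layers_with_relu(x):
    return 3.0 * relu(2.0 * x)

print(two_linear_layers(-1.5), one_linear_layer(-1.5), two_layers_with_relu(-1.5))
```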

Imagine you're trying to decide whether to go for a run. Your decision depends on various factors like weather, your energy level, and your motivation. Activation functions allow the network to consider all these factors and make a more nuanced decision, just like you would.

Conclusion

In this whirlwind tour of neural networks, we've peeled back the layers of their structure, dived into the training process, and explored the critical role of activation functions. These intelligent systems, inspired by the human brain, have revolutionized the world of technology.

The next time you ask your voice assistant a question or enjoy the convenience of personalized recommendations, remember that it's the intricate dance of artificial neurons in a neural network that's making it all happen. Neural networks are the foundation of AI, and understanding their working principle is the key to unlocking the potential of this groundbreaking technology.

So, as we wrap up this exploration, take a moment to appreciate the remarkable world of neural networks, where data becomes knowledge, patterns emerge, and innovation knows no bounds.
